Analyzing Stack Overflow data with ClickHouse

This dataset contains every Posts, Users, Votes, Comments, Badges, PostHistory, and PostLinks that has occurred on Stack Overflow.

Users can either download pre-prepared Parquet versions of the data, containing every post up to April 2024, or download the latest data in XML format and load this. Stack Overflow provide updates to this data periodically - historically every 3 months.

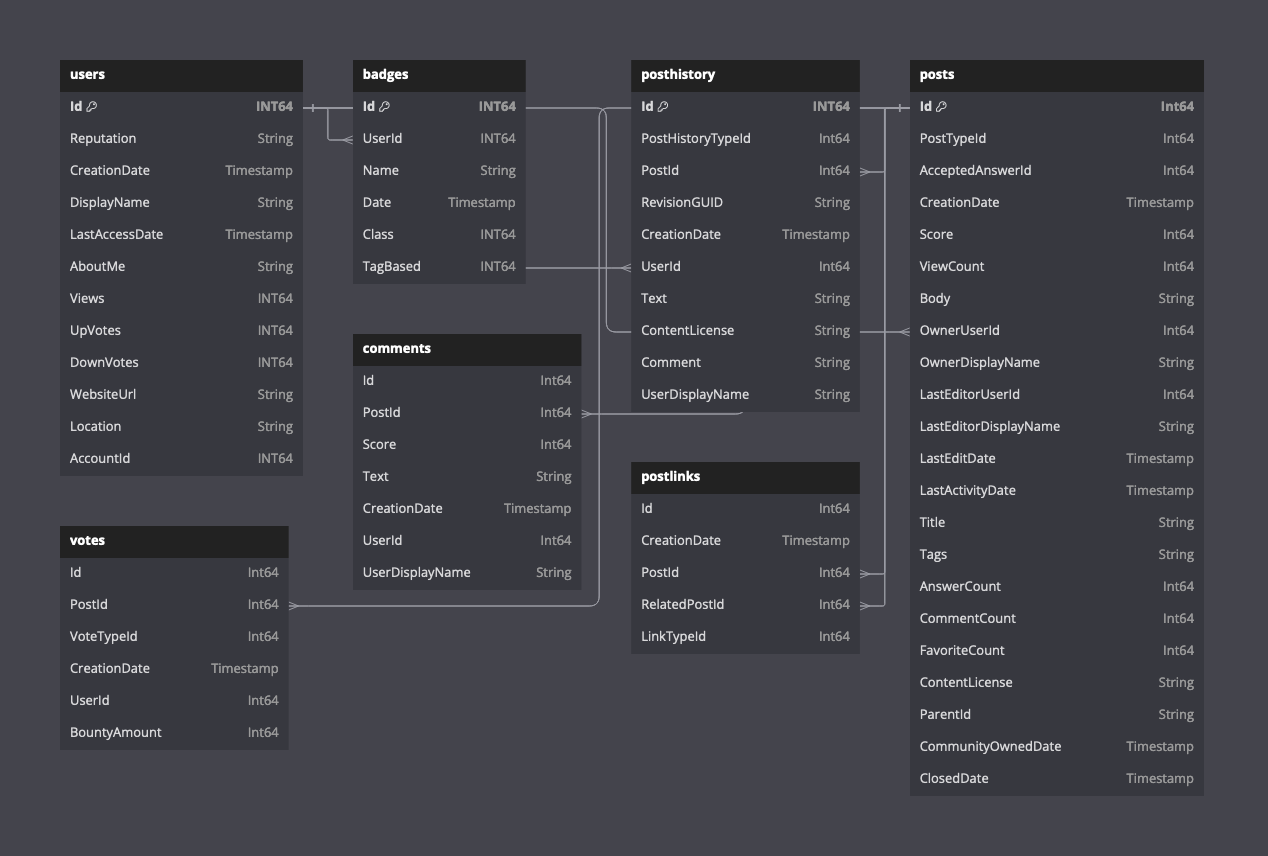

The following diagram shows the schema for the available tables assuming Parquet format.

A description of the schema of this data can be found here.

Pre-prepared data

We provide a copy of this data in Parquet format, up to date as of April 2024. While small for ClickHouse with respect to the number of rows (60 million posts), this dataset contains significant volumes of text and large String columns.

The following timings are for a 96 GiB, 24 vCPU ClickHouse Cloud cluster located in eu-west-2. The dataset is located in eu-west-3.

Posts

Posts are also available by year e.g. https://datasets-documentation.s3.eu-west-3.amazonaws.com/stackoverflow/parquet/posts/2020.parquet

Votes

Votes are also available by year e.g. https://datasets-documentation.s3.eu-west-3.amazonaws.com/stackoverflow/parquet/posts/2020.parquet

Comments

Comments are also available by year e.g. https://datasets-documentation.s3.eu-west-3.amazonaws.com/stackoverflow/parquet/posts/2020.parquet

Users

Badges

PostLinks

PostHistory

Original dataset

The original dataset is available in compressed (7zip) XML format at https://archive.org/download/stackexchange - files with prefix stackoverflow.com*.

Download

These files are up to 35GB and can take around 30 mins to download depending on internet connection - the download server throttles at around 20MB/sec.

Convert to JSON

At the time of writing, ClickHouse does not have native support for XML as an input format. To load the data into ClickHouse we first convert to NDJSON.

To convert XML to JSON we recommend the xq linux tool, a simple jq wrapper for XML documents.

Install xq and jq:

The following steps apply to any of the above files. We use the stackoverflow.com-Posts.7z file as an example. Modify as required.

Extract the file using p7zip. This will produce a single xml file - in this case Posts.xml.

Files are compressed approximately 4.5x. At 22GB compressed, the posts file requires around 97G uncompressed.

The following splits the xml file into files, each containing 10000 rows.

After running the above users will have a set of files, each with 10000 lines. This ensures the memory overhead of the next command is not excessive (xml to JSON conversion is done in memory).

The above command will produce a single posts.json file.

Load into ClickHouse with the following command. Note the schema is specified for the posts.json file. This will need to be adjusted per data type to align with the target table.

Example queries

A few simple questions to you get started.

Most popular tags on Stack Overflow

User with the most answers (active accounts)

Account requires a UserId.

ClickHouse related posts with the most views

Most controversial posts

Attribution

We thank Stack Overflow for providing this data under the cc-by-sa 4.0 license, acknowledging their efforts and the original source of the data at https://archive.org/details/stackexchange.